Understanding How Autofocus in Mirrorless Cameras Works and Its Limitations

It’s been a long time since lenses were purely manual focus, and technology keeps advancing at an ever-increasing rate. Today’s autofocus systems far outperform even the high-end DSLRs of 10 years ago. However, they still can’t read minds, so it’s good to have a sense of how they work and where they can fail.

In this article, I’ll focus on the basic concepts of autofocus without getting too bogged down in technical details. These details vary from manufacturer to manufacturer and are proprietary, meaning they aren’t publicly available. In addition, new software-based technologies keep being introduced, so the algorithms may behave differently in the next generation of cameras. Existing cameras also commonly gain new capabilities through firmware updates.

Updates must be done manually. If you don’t normally do this, I recommend checking the manufacturer’s website for a newer firmware version for your camera.

For this article, I’ll be demonstrating autofocus on my Canon R5, but other mirrorless cameras work similarly.

Two main technologies are important for understanding autofocus—phase detection and contrast detection. Let’s take a look at each of these in greater detail.

Phase detection

The concept of phase detection goes back to the days of single-lens reflex cameras, though it has undergone major improvements since then. To understand the whole concept, I’ll start by explaining how the human eye sees objects.

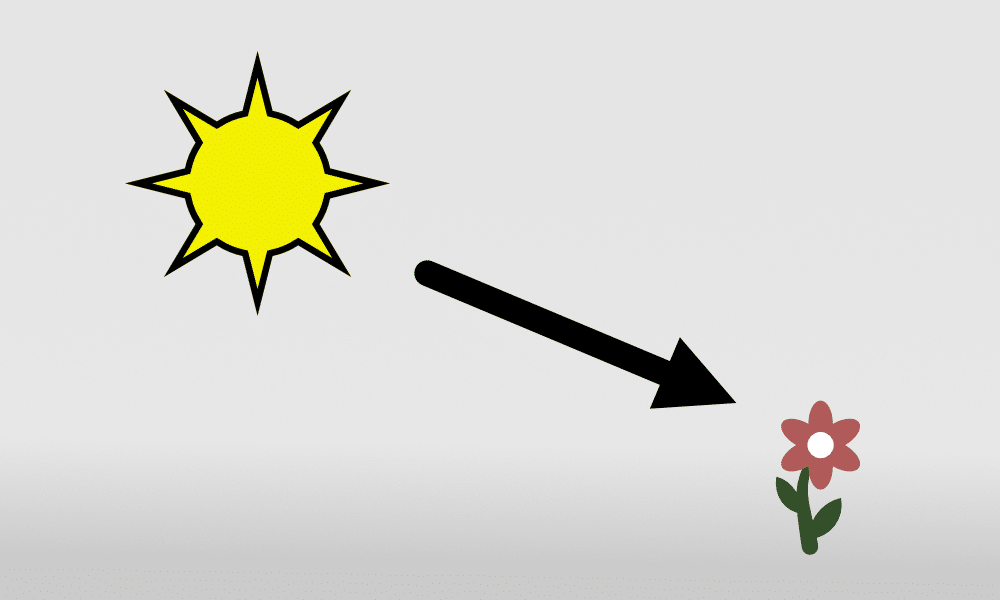

It all starts with light from the sun or other light source.

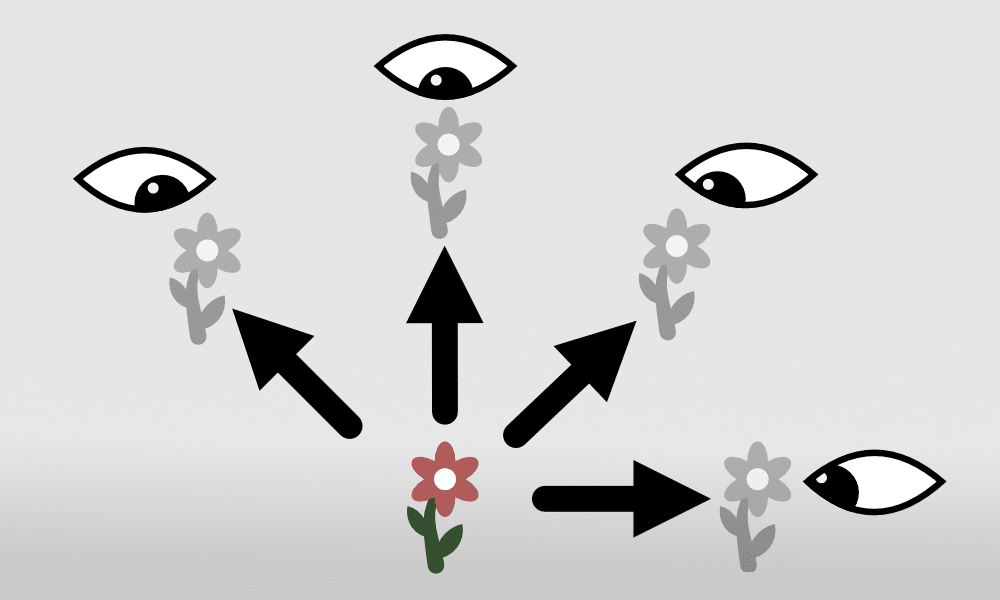

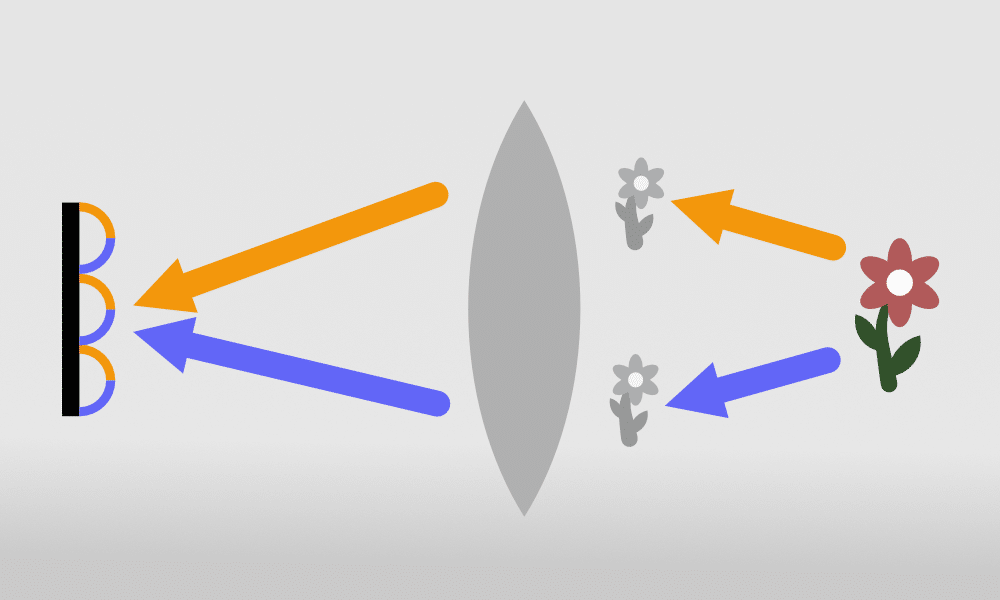

The incoming light bounces off the object in all directions (for the sake of simplicity, assume an ordinary diffuse reflection). This is why the object can be seen from any angle.

The lens collects and focuses several such images onto one place—the sensor. The faster the lens, the larger the bundle of images it can collect.

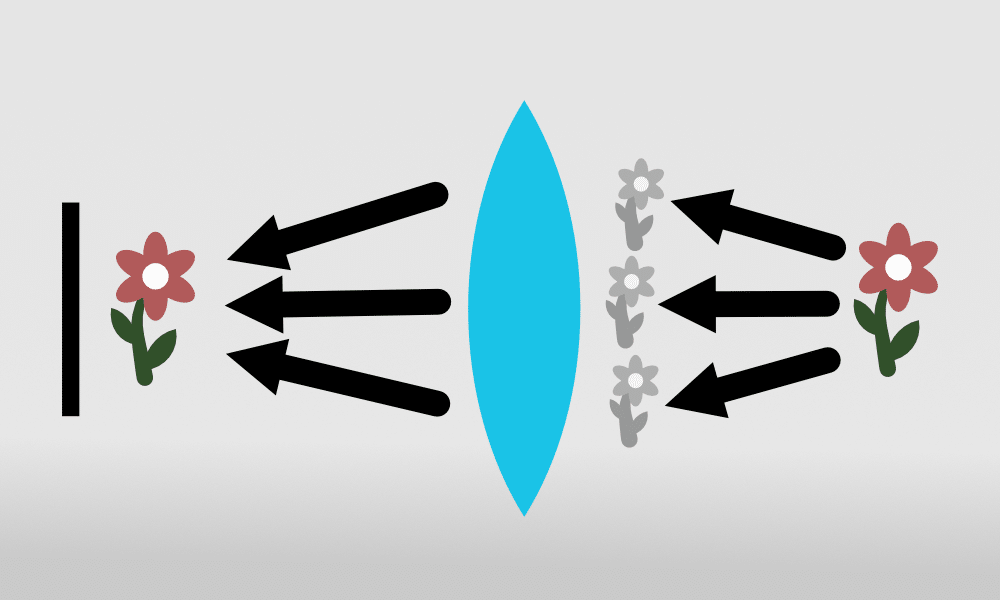

In DSLRs, there was a special second sensor that looked in different directions so it could distinguish images coming from different directions for comparison.

If the images are properly focused, they can be superimposed at the focus point. If not, they are offset from one another. From the size of this offset, the camera can calculate how far it needs to move the lens motor to get everything in focus.
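As a rough illustration of this offset measurement, here is a minimal Python sketch. It assumes each of the two views can be reduced to a one-dimensional brightness profile, and it looks for the shift at which the two profiles match best. The function names, the sample data, and the `STEPS_PER_PIXEL` calibration constant are made up for this example; the methods real cameras use are proprietary and far more refined.

```python
# Hypothetical calibration constant: lens motor steps per pixel of offset.
# Real values depend on the lens and are not public.
STEPS_PER_PIXEL = 10


def estimate_shift(left, right, max_shift):
    """Return the shift (in pixels) at which `right` best matches `left`.

    For every candidate shift s, compare left[i] with right[i + s] over the
    overlapping samples and keep the s with the smallest mean absolute
    difference.
    """
    n = len(left)
    if max_shift >= n:
        raise ValueError("max_shift must be smaller than the profile length")
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        diffs = [abs(left[i] - right[i + s]) for i in range(n) if 0 <= i + s < n]
        cost = sum(diffs) / len(diffs)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def lens_correction(shift):
    """Convert the measured offset into motor steps (assumed to be linear)."""
    return shift * STEPS_PER_PIXEL


# A single bright detail as seen from the two directions.
left_view = [0, 0, 0, 1, 3, 7, 3, 1, 0, 0, 0, 0]
right_view = [0, 0, 0, 0, 0, 1, 3, 7, 3, 1, 0, 0]  # same detail, 2 px further right

shift = estimate_shift(left_view, right_view, max_shift=4)
print(shift, lens_correction(shift))  # prints "2 20"
```

In this sketch, a shift of zero means the two views already line up, and the sign of a nonzero shift indicates which way the correction has to go.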

With mirrorless cameras, no special chip is needed, and the main sensor handles “looking” in different directions. Each of the pixels is split in half, so it can distinguish light from the left and right.

If you then want to focus on an object in the top right, a group of adjacent pixels in that area is selected and these parts of the incoming images are compared. This allows the camera to focus accurately.
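The selection of a focus area can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the left and right pixel halves are modeled as two small 2D lists, and the window is reduced to two brightness profiles by averaging each column. The function name `window_profiles` and the sample values are hypothetical and do not describe how any particular camera reads its sensor.

```python
def window_profiles(left_half, right_half, top, left, height, width):
    """Average each column inside the focus window, once per half-pixel image.

    `left_half` and `right_half` are 2D lists (rows of brightness values)
    holding what the left and right pixel halves recorded.
    """
    def column_means(image):
        return [
            sum(image[row][col] for row in range(top, top + height)) / height
            for col in range(left, left + width)
        ]

    return column_means(left_half), column_means(right_half)


# Tiny 4x6 "sensor": a detail sits in the top right corner.
left_half = [
    [0, 0, 0, 0, 2, 4],
    [0, 0, 0, 0, 2, 4],
    [9, 9, 9, 9, 9, 9],
    [9, 9, 9, 9, 9, 9],
]
right_half = [
    [0, 0, 0, 2, 4, 0],
    [0, 0, 0, 2, 4, 0],
    [9, 9, 9, 9, 9, 9],
    [9, 9, 9, 9, 9, 9],
]

# Focus window: top two rows, rightmost three columns.
profiles = window_profiles(left_half, right_half, top=0, left=3, height=2, width=3)
print(profiles)  # prints "([0.0, 2.0, 4.0], [2.0, 4.0, 0.0])"
```

Only the pixels inside the window contribute, so the rest of the frame has no influence on where the camera focuses.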

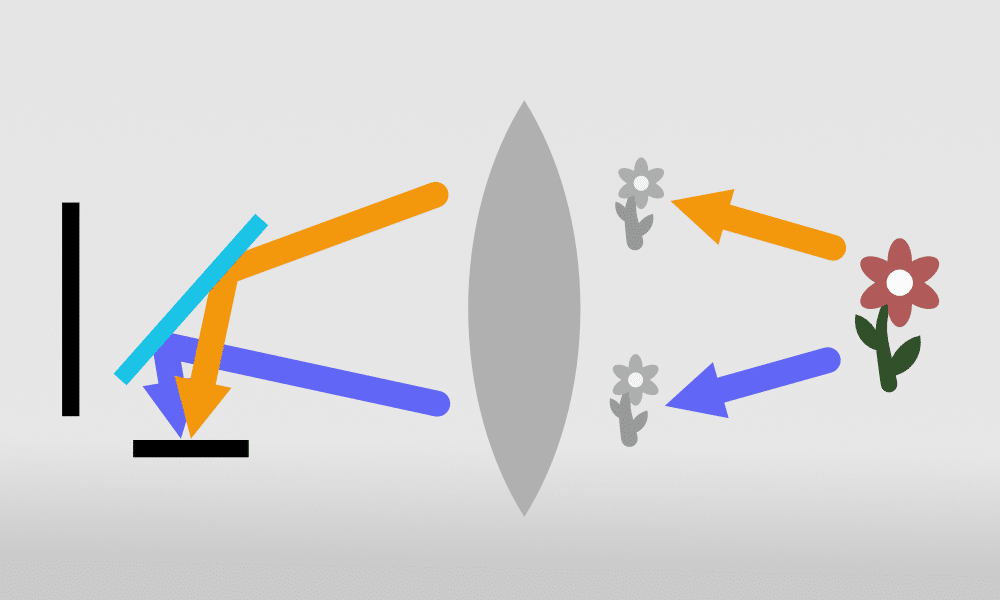

Phase detection, though modified, is not revolutionary in mirrorless cameras. Higher-end DSLRs with multiple focus points have the same, if not better, autofocus in this respect. DSLRs usually detect light coming not only from the left and right, but also from above and below, and in some cases even diagonally.

Mirrorless cameras currently have trouble focusing on repetitive, horizontal objects like window blinds. In the future, pixels may be split not into two but into four or more parts, which could improve autofocus.
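One plausible way to picture this difficulty is to look at how many offsets match equally well when only left and right views are compared. The Python sketch below is my own simplified model, not a description of any camera's algorithm; `shift_costs`, `candidate_shifts`, and the sample rows are hypothetical. A single detail gives one clear answer, a row of pixels running along a horizontal slat looks the same at every offset, and a repeating pattern matches at several offsets.

```python
def shift_costs(left, right, max_shift):
    """Mean absolute difference between the two views for each candidate shift."""
    n = len(left)
    costs = {}
    for s in range(-max_shift, max_shift + 1):
        diffs = [abs(left[i] - right[i + s]) for i in range(n) if 0 <= i + s < n]
        costs[s] = sum(diffs) / len(diffs)
    return costs


def candidate_shifts(left, right, max_shift, tol=1e-9):
    """Return every shift whose cost ties with the best one (within tol)."""
    costs = shift_costs(left, right, max_shift)
    best = min(costs.values())
    return [s for s, cost in costs.items() if cost - best <= tol]


detail = [0, 0, 0, 1, 3, 7, 3, 1, 0, 0, 0, 0]  # one distinct feature
slat_row = [5] * 12                             # row along a horizontal slat
stripes = [0, 0, 1, 1] * 3                      # pattern repeating every 4 px

print(candidate_shifts(detail, detail, 4))      # prints "[0]"
print(candidate_shifts(slat_row, slat_row, 4))  # prints "[-4, -3, -2, -1, 0, 1, 2, 3, 4]"
print(candidate_shifts(stripes, stripes, 4))    # prints "[-4, 0, 4]"
```

When more than one offset ties, the comparison alone cannot tell which one is correct, which is consistent with the camera hesitating or missing focus on such subjects.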

Phase detection is just a precursor to the second, truly revolutionary autofocus technology.

Contrast detection

Contrast detection partially existed in DSLRs. If you didn’t choose a specific focusing point, then the camera would select the nearest object for you. Some DSLRs were also capable of face detection, like Nikon’s 3D-tracking face detection.

In DSLRs, however, contrast detection relied on a secondary chip with a low resolution of only thousands of pixels. Mirrorless cameras have taken everything up a level and detect objects using the main sensor at a resolution of millions of pixels. Advances in processors that can handle this amount of data have also helped.

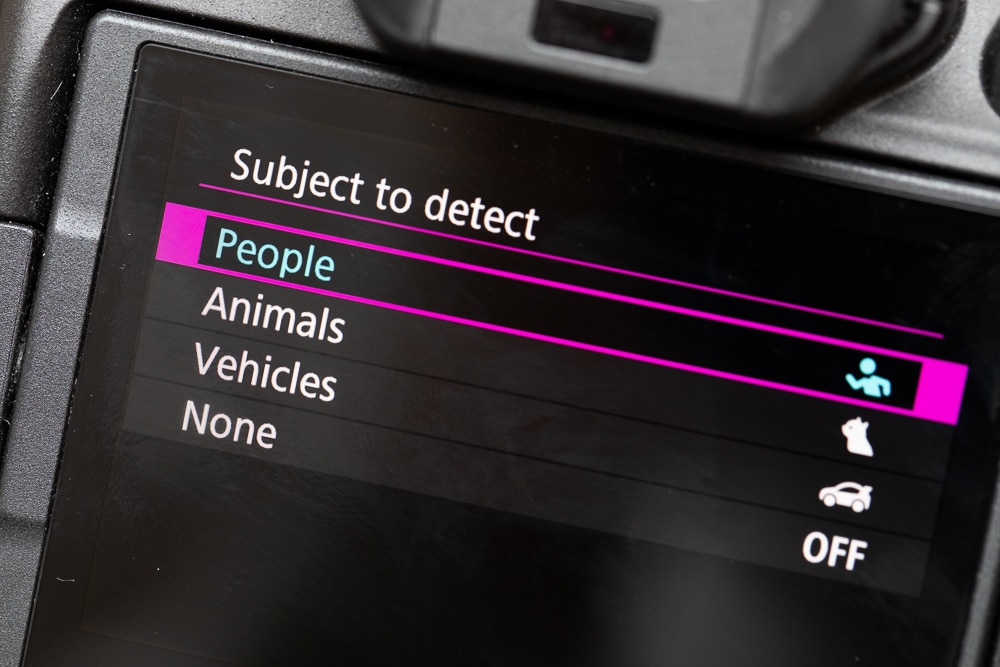

Depending on the settings, mirrorless cameras can automatically detect faces, vehicles, or animals.

People are particularly important subjects in photography, so autofocus systems can focus not only on faces, but also on eyes. Such accuracy is required with fast lenses, where a focus error of even an inch is noticeable.

You can switch between eyes or subjects using the buttons. For scenes with unusual objects, the photographer has the option to select their own subject for the autofocus system to follow.

Continuous autofocus

I’ve described both autofocus systems as if each ran once from start to finish. However, it’s worth remembering that with continuous autofocus enabled, both run continuously, keeping the target object in focus at all times.

Canon R5, Sigma 150-600/5-6.3 Contemporary, 1/4000s, f/6.3, ISO 1600, focal length 324mm

Practical insights

Autofocus, especially with eye detection, is a major breakthrough in comparison to DSLRs. The camera can focus very quickly on its own and keep the selected eye in focus even when the camera or subject is moving. Photos are rarely ruined by focus that is off by several inches or by focusing on the background accidentally. The percentage of out-of-focus images has been significantly reduced.

However, autofocus is still not perfect and probably never will be. That’s why the photographer should always watch for cases where the camera focuses on the wrong eye or the wrong subject, or where the algorithm loses track of the selected object in the scene.

For this reason, it is a good idea to have an additional button dedicated to focusing. I usually set mine to focus on the center point, which sometimes saves pictures, especially in fast reportage situations.

Truly revolutionary

I’m thrilled with the advances in autofocus. My success rate for shooting has increased significantly compared to DSLRs. All that’s left to figure out is what to do with the huge number of good photos I have to sort through after a shoot.
