Sensor Technologies: What You Can Expect From Your Sensor

Most of today’s camera sensors are based on a single design. That design has its benefits, but it also has drawbacks that can show up in your photos. Manufacturers are therefore always looking for better alternatives that reduce those drawbacks. Read on to find out how their various solutions differ and what you can expect from your sensor.

Naturally you don’t need to know sensor technologies down to the last detail, but an overall idea will definitely help you, for example when you’re buying a new camera or when you’re seeing strange errors in your pictures of the kind that sensors can cause.

Today’s Typical Sensors

The vast majority of equipment, whether phones or dedicated cameras, uses CMOS (Complementary Metal Oxide Semiconductor) sensors. CMOS technology itself dates back to the 1960s, and the image sensors built on it took their current form in the 1990s.

Every chip contains millions of cells/pixels that can transform light into electrons. When a picture is taken, an accumulated charge is left in every one of the chip’s cells; these charges are read to determine how much light fell onto each given spot.

It looks simple, but there’s a catch. On its own, this system is unable to distinguish colors.

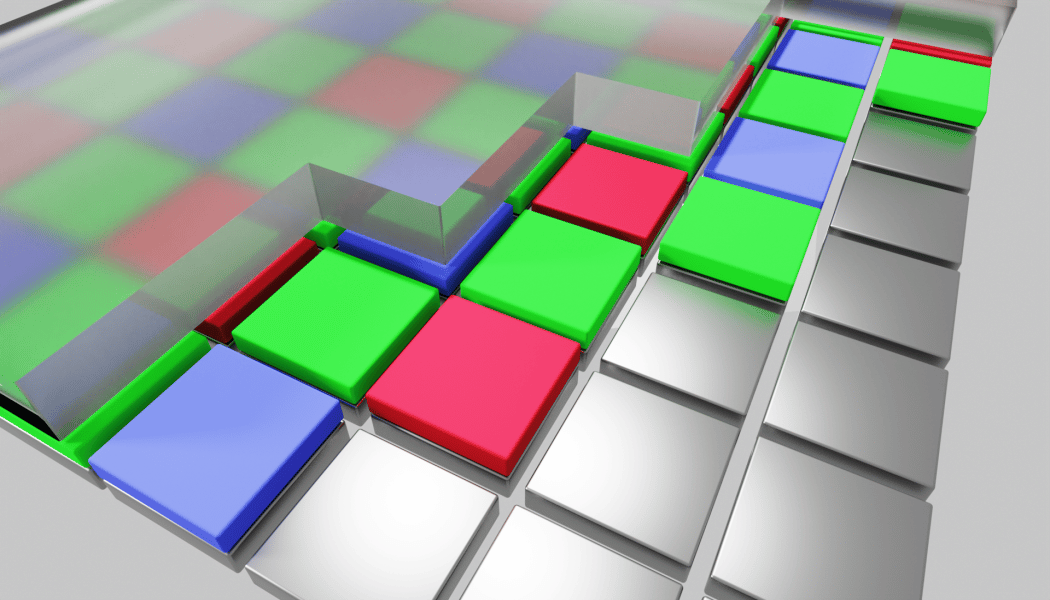

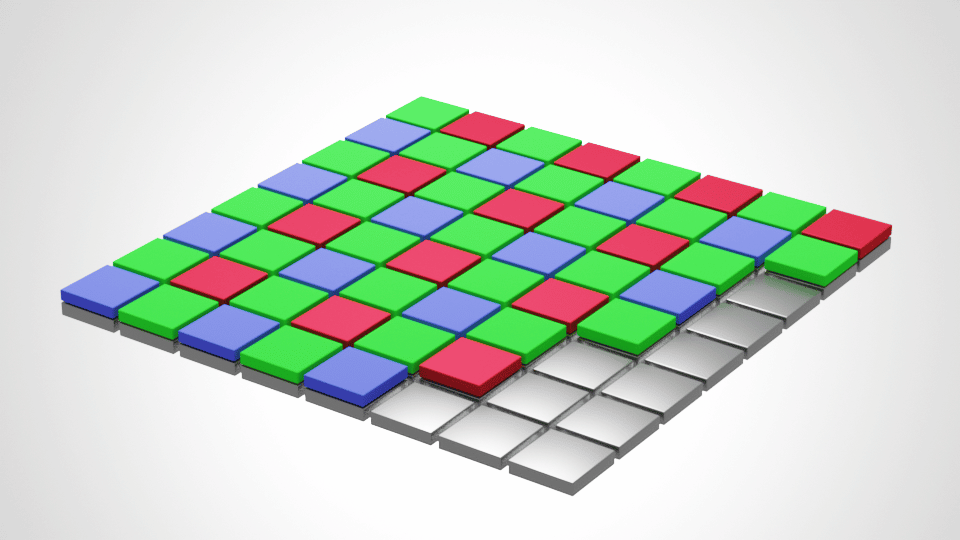

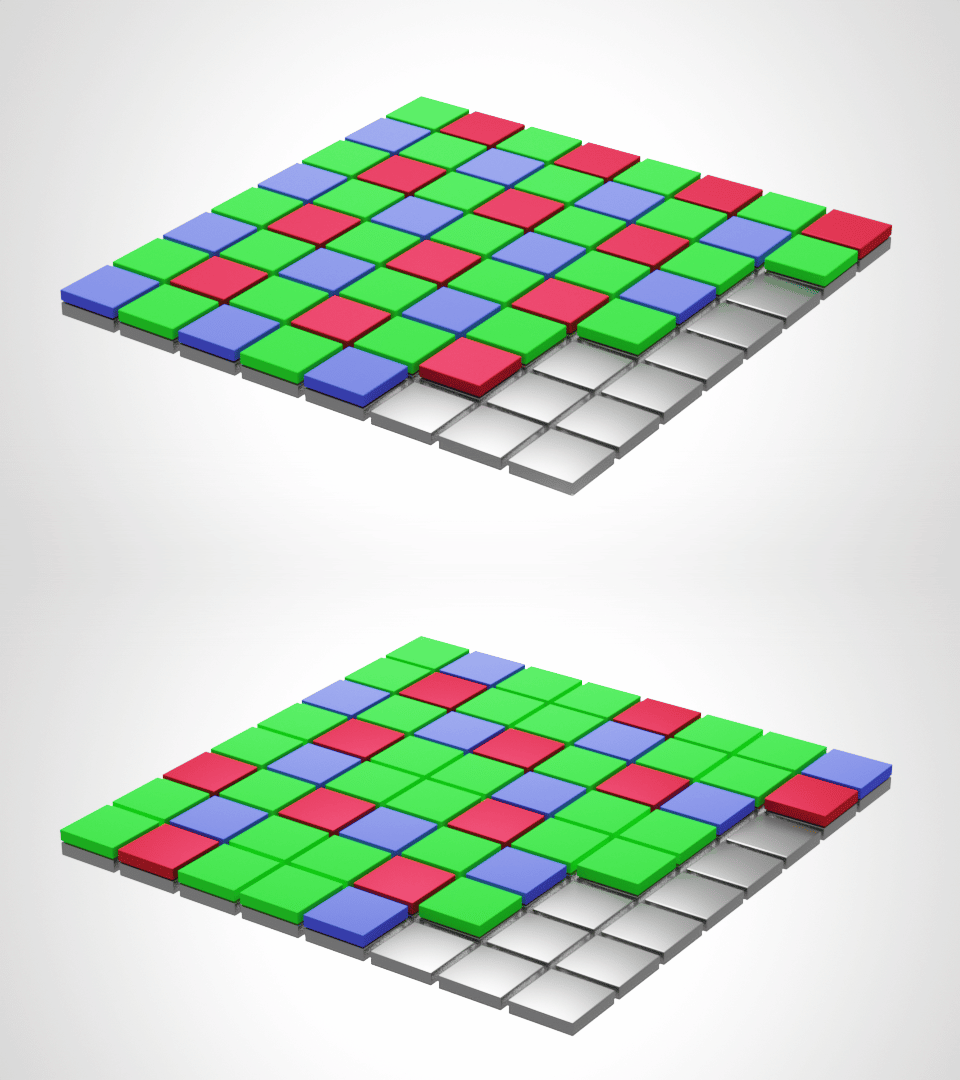

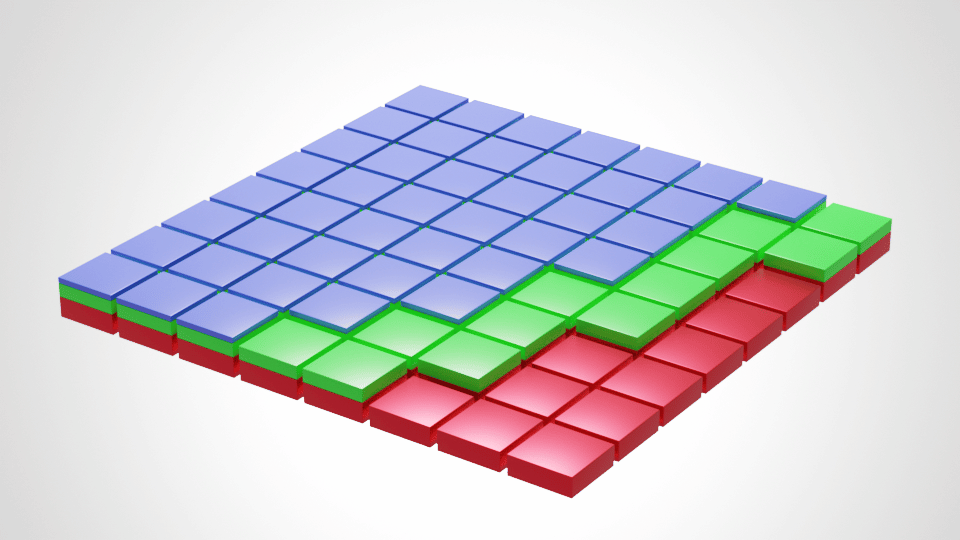

To get around this, the sensor uses a trick named after its inventor: the Bayer filter, or Bayer mask. A red, green, or blue filter is placed in front of every cell, and it only allows light of that color to pass through. Together, these filters form a regular grid of cells, each one sensitive to just one of the three colors.

As a result, every cell records only a single color, and the other two colors are calculated later from the neighboring cells. That is why the sensor illustrated here would still give us a photograph with a size of 8 x 8 pixels.
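To make the "calculated later" step concrete, here is a minimal sketch of the simplest possible interpolation: each missing color is taken as the average of the nearby cells (within a 3 x 3 window) that did measure it. The RGGB layout and the names `bayer_color` and `demosaic` are assumptions made for illustration; real cameras use considerably more sophisticated algorithms.

```python
def bayer_color(x, y):
    """Filter color over cell (x, y), assuming an RGGB layout."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def demosaic(raw):
    """Turn a grid of single-color readings into (R, G, B) pixels.

    raw[y][x] is the one value measured by cell (x, y). Each missing
    channel is estimated as the mean of the cells in the surrounding
    3 x 3 window that sit under a filter of that color.
    """
    height, width = len(raw), len(raw[0])
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for ny in range(max(0, y - 1), min(height, y + 2)):
                for nx in range(max(0, x - 1), min(width, x + 2)):
                    color = bayer_color(nx, ny)
                    sums[color] += raw[ny][nx]
                    counts[color] += 1
            pixel = {c: sums[c] / counts[c] for c in "RGB"}
            # The cell's own color was measured directly, so keep it as-is.
            pixel[bayer_color(x, y)] = raw[y][x]
            row.append((pixel["R"], pixel["G"], pixel["B"]))
        image.append(row)
    return image


# An evenly lit gray wall: every cell reads the same value.
raw = [[0.5] * 8 for _ in range(8)]
image = demosaic(raw)
print(len(image), "x", len(image[0]), "pixels, first pixel:", image[0][0])
```

An 8 x 8 grid of cells goes in and an 8 x 8 grid of full-color pixels comes out, even though two thirds of the color values in the result were never measured.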

You may notice that while the red and blue cells are roughly equal in number, there are twice as many green ones. This is because the human eye is by far the most sensitive to green, and so it makes sense to capture the most information for this channel.
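The ratio is easy to check by building the mask for the 8 x 8 sensor and counting the filters. This is only a sketch; the RGGB ordering of the repeating 2 x 2 tile is an assumption, and other orderings give the same counts.

```python
from collections import Counter

# One repeating 2 x 2 tile of the mask (RGGB order is an assumption).
TILE = [["R", "G"],
        ["G", "B"]]

size = 8
mask = [[TILE[y % 2][x % 2] for x in range(size)] for y in range(size)]

for row in mask:
    print(" ".join(row))

counts = Counter(color for row in mask for color in row)
print(dict(counts))  # {'R': 16, 'G': 32, 'B': 16}
```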

Let’s note as an aside that CCD sensors once battled with CMOS sensors for their place in the sun. These sensors work in the same basic way; they just read information differently (more pixel-by-pixel than row-by-row). Today they’re only used in special cases in specific devices.

The Problems With the Bayer Filter

The sensor design I’ve described here has many nice properties, but also a few downsides:

- Reduced resolution

- Lost light

- Aliasing (false colors, etc.)

Reduced Resolution

The sensor in the image above would be advertised as one that produces 64-pixel images. And yet in reality it only captures one third of the information in such an image, obtaining the rest computationally.

The stated resolution is thus misleading. If you had a 30-megapixel camera with this setup, in reality it would have to make do with cells corresponding to about 10 megapixels (albeit cleverly positioned ones).
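The arithmetic behind that estimate can be sketched as follows. The function name `bayer_samples` is hypothetical, and the split assumes the usual one red, two green, and one blue cell per 2 x 2 tile.

```python
def bayer_samples(megapixels):
    """Split a Bayer sensor's cells by filter color (in millions).

    Assumes the usual layout of 1 red, 2 green and 1 blue cell per
    2 x 2 tile.
    """
    return {
        "red": megapixels / 4,
        "green": megapixels / 2,
        "blue": megapixels / 4,
    }


advertised = 30          # megapixels, as in the example above
measured = advertised    # one measured value per cell
needed = advertised * 3  # a full image needs R, G and B for every pixel

print(bayer_samples(advertised))
print(f"measured {measured} of {needed} million values "
      f"({measured / needed:.0%}), the rest is interpolated")
```

Thirty million measured values are exactly what 10 million full-color pixels would need, which is where the "about 10 megapixels" figure comes from.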

Lost Light

The tiny color filters mean that any light that hits the sensor, but hits the wrong color in the Bayer mask, will be ignored. The sensor won’t learn of it at all.

It’s said that over half the light hitting the sensor is lost this way. If we could find a way to process this discarded information, noise would be reduced, and taking pictures under weak light would be somewhat easier.

Aliasing

The last problem is by far the most treacherous. The Bayer mask does fantastic work with swaths of color—it checks the values for red, green, and blue at regular distances, and since its calculations fill in (interpolate) pixels into the missing spaces, you get a wonderful swath of color with a smooth gradient.

But this approach falls short when it runs into any sort of fine, regular real-world structure with quickly alternating colors. One typical example is clothing with fine fibers, which will produce either imaginary patterns or false colors in your pictures. This effect is called moiré.

Aliasing is much more likely to occur in man-made, regular structures (like woven textiles or distant skyscrapers) than e.g. when taking pictures of landscapes.
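A small simulation shows how false colors can arise. It is a sketch under strong assumptions: an RGGB mask, ideal filters, and a purely black-and-white scene of vertical stripes that happen to be exactly one cell wide. The names `bayer_color` and `channel_means` are made up for this example.

```python
def bayer_color(x, y):
    """Filter color over cell (x, y), assuming an RGGB layout."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def channel_means(scene):
    """Average reading per filter color for a grayscale scene.

    scene[y][x] is the brightness falling on cell (x, y). White light
    contains all colors, so it passes through whichever filter it hits.
    """
    totals = {"R": 0.0, "G": 0.0, "B": 0.0}
    counts = {"R": 0, "G": 0, "B": 0}
    for y, row in enumerate(scene):
        for x, brightness in enumerate(row):
            color = bayer_color(x, y)
            totals[color] += brightness
            counts[color] += 1
    return {c: totals[c] / counts[c] for c in "RGB"}


# Black-and-white vertical stripes, each exactly one cell wide.
stripes = [[1.0 if x % 2 == 0 else 0.0 for x in range(8)] for _ in range(8)]
print(channel_means(stripes))  # {'R': 1.0, 'G': 0.5, 'B': 0.0}
```

Every red cell lands on a white stripe and every blue cell on a black one, so the sensor concludes that a colorless fabric is strongly orange.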

How Camera Makers Deal With Aliasing

The aliasing problems described above don’t actually appear in pictures all that often. That’s because camera makers have already taken these pitfalls into account, and they typically place a filter layer just in front of the Bayer mask to blur the image slightly. Thanks to this layer, even fine structures spread out into neighboring cells and are thus recorded by cells of all three colors. This layer is called the low-pass filter, and most cameras have one.
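The effect of such a blur can be sketched on a single sensor row. The filter is modeled here, as an assumption, by averaging each cell with its right-hand neighbor; `low_pass` and `red_green_means` are hypothetical names, and the row is assumed to alternate red and green cells.

```python
def low_pass(row):
    """Blur a row of brightness values by averaging each cell with its
    right-hand neighbor (wrapping around at the edge for simplicity)."""
    n = len(row)
    return [(row[x] + row[(x + 1) % n]) / 2 for x in range(n)]


def red_green_means(row):
    """Mean reading of the red (even) and green (odd) cells in one
    R G R G ... sensor row."""
    red = row[0::2]
    green = row[1::2]
    return sum(red) / len(red), sum(green) / len(green)


# Black-and-white stripes, each exactly one cell wide.
stripes = [1.0, 0.0] * 4

print("without filter:", red_green_means(stripes))            # (1.0, 0.0)
print("with filter:   ", red_green_means(low_pass(stripes)))  # (0.5, 0.5)
```

With the blur, red and green cells agree and the color cast disappears, but so do the stripes themselves.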

Its disadvantage is clear: your pictures would be sharper without this filter.

As megapixel counts grow larger, the problem of aliasing grows smaller, and so some manufacturers have decided to offer some of their models in versions with and without a low-pass filter (or with a different filter that cancels out the low-pass filter). Examples include the Nikon D800 and D800E (36 megapixels; the latter is filterless) and the Canon 5DS and 5DS R (50 megapixels; the latter is filterless).

Leica went for a more extreme approach with its M Monochrom, which has neither a low-pass filter nor a Bayer mask and records only black-and-white images. This avoids both the false colors and the other problems with the Bayer mask, i.e. loss of light and resolution. The price you pay, naturally, is that you can’t shoot in color at all.

Fujifilm, meanwhile, has chosen a milder solution. It doesn’t use a low-pass filter, and it has replaced the original Bayer mask with its own X-Trans filter. In this filter, the individual colors over the pixels have been rearranged slightly so as to produce less regular structures, thus preventing any major color errors.

Pentax uses an elegant system: it has a sensor with a Bayer mask, but no low-pass filter. Whenever the user decides that a blurring filter would be useful, they flip a setting in the menu to make the camera use its stabilization system to shake the sensor slightly during shots. These are vibrations on the order of less than one pixel, which is precisely enough to eliminate aliasing. When maximum sharpness is needed, this effect can be turned back off again.

One option that is becoming more and more widespread is to use the system originally intended for sensor stabilization to quickly take several shots in a row, each shifted by precisely 1 pixel in a different direction. These shots are instantly merged to obtain information on all of the colors in every pixel. Sometimes resolution is increased in a similar way as well. You’ll find this solution under the name multi-shot.
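The merging idea can be sketched as follows, assuming an RGGB mask, four exposures shifted by whole cells, a perfectly still scene, and no edge effects. The functions `capture` and `merge` are hypothetical and do not correspond to any camera’s actual processing.

```python
CHANNEL = {"R": 0, "G": 1, "B": 2}


def bayer_color(x, y):
    """Filter color over cell (x, y), assuming an RGGB layout."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def capture(scene, dx, dy):
    """One exposure with the sensor shifted by (dx, dy) whole cells.

    Returns, for every scene point, the filter color that covered it
    and the single value measured through that filter.
    """
    shot = []
    for y, row in enumerate(scene):
        shot_row = []
        for x, rgb in enumerate(row):
            color = bayer_color(x + dx, y + dy)
            shot_row.append((color, rgb[CHANNEL[color]]))
        shot.append(shot_row)
    return shot


def merge(shots):
    """Combine shifted exposures into full (R, G, B) pixels.

    Green is measured twice per point, so its two readings are averaged.
    """
    height, width = len(shots[0]), len(shots[0][0])
    merged = []
    for y in range(height):
        row = []
        for x in range(width):
            readings = {"R": [], "G": [], "B": []}
            for shot in shots:
                color, value = shot[y][x]
                readings[color].append(value)
            row.append(tuple(sum(readings[c]) / len(readings[c])
                             for c in "RGB"))
        merged.append(row)
    return merged


# A tiny made-up scene with a different color at every point.
scene = [[(x / 4, y / 4, (x + y) / 8) for x in range(4)] for y in range(4)]
shots = [capture(scene, dx, dy) for dx, dy in [(0, 0), (1, 0), (0, 1), (1, 1)]]
print(merge(shots) == scene)  # True
```

Because every point is seen once through red, once through blue, and twice through green, the merged image matches the scene without any interpolation.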

Many visible aliasing errors can also be resolved using computer edits, but it’s obviously impractical and time-consuming to deal with too many pictures with these problems.

Foveon

Conceptually, there are also other sensors out there besides the CMOS with its Bayer filter. Sigma’s Foveon is one example; here, every color is recorded in every pixel. The Foveon makes use of the fact that different wavelengths of light penetrate to different depths in light-sensitive silicon. Every pixel is made up of three cells stacked on top of each other, each with a fine-tuned thickness. The light passes both into them and through them. Only the red component penetrates into the lowest cell; the middle cell sees red and green; and the highest cell captures all three colors including blue. The specific values of the individual color components are then determined using simple mathematics.
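Under the simplified model just described, that mathematics amounts to subtracting neighboring layers. The sketch below assumes ideal layers with no overlap or noise, and the function names are made up for illustration; real Foveon processing is more involved.

```python
def layer_signals(red, green, blue):
    """Signals in the three stacked cells under the simplified model
    described above, returned as (top, middle, bottom)."""
    return red + green + blue, red + green, red


def recover_rgb(top, middle, bottom):
    """Undo the stacking by subtracting neighboring layers."""
    red = bottom
    green = middle - bottom
    blue = top - middle
    return red, green, blue


top, middle, bottom = layer_signals(red=120, green=200, blue=40)
print(top, middle, bottom)               # 360 320 120
print(recover_rgb(top, middle, bottom))  # (120, 200, 40)
```

Note that green and blue are each the difference of two measured values, so any error in either reading carries over into the result.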

However, this system is not yet unambiguously better than the CMOS system in general use. For now, the Foveon’s superior delivery of color details is counterbalanced by increased noise and minor imprecisions in color.

Here as well it’s important to look at the resolution. Manufacturers want marketing numbers comparable to those of cameras with Bayer filters, so they count every cell separately and multiply the number of pixels by three. Thus a camera declared as having 60 megapixels will in reality “only” have 20 million pixels, each with three cells for different colors stacked beneath it.

Lytro

As a curiosity, I’d like to also mention the company named Lytro, which introduced a sensor where every pixel records both the quantity of light and the direction it came from. This lets it determine depth and, for example, focus on any place you choose even after you’ve taken your picture.

Lytro produced two fairly obscure cameras for the general public and then moved on to products for film professionals. That didn’t work out well for them either, and the company is no longer developing this technology.

Everything’s Getting Better

That’s it for the main approaches used in photographic sensors. But manufacturers are still continuing their efforts, and besides the technique used, they’re also improving the electronics behind the pixels: both the electronics that acquire data from the scene and the electronics that process this data. Because of this, we’re all getting better pictures every day, and in conjunction with computational photography and the power hidden inside our tiny devices, we have plenty to look forward to.
